Projects on Document Intelligence

Methods

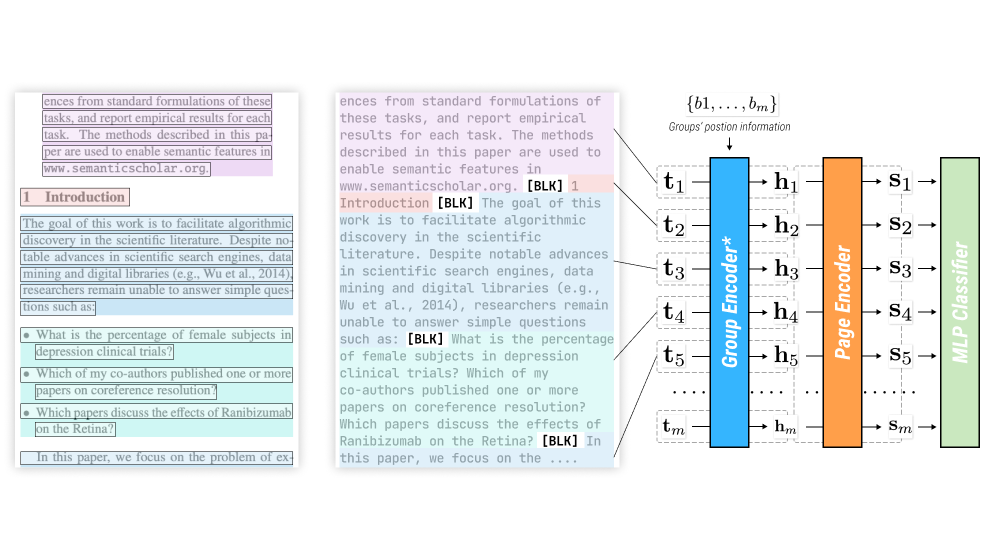

Visual Layout Structures for Scientific Text Classification

Classifying the texts in scientific papers into semantic categories is one of the most important and fundamental tasks for automated information extraction from scholarly documents. Although these documents are usually intricately styled, most existing work models only the flattened text. We propose to use visual layout structures (VILA) to improve this text classification task; the two proposed models bring either a 1.9% accuracy improvement or a 47% efficiency improvement.
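One way to expose visual layout structure to a text-only classifier is to mark the boundaries between visual groups (such as text lines or blocks) with a special token before the text is tokenized. The sketch below assumes each token already carries a group id from a PDF parser or layout detector; the `[BLK]` separator and the `insert_layout_indicators` function are hypothetical names for illustration, not the released VILA code.

```python
from typing import List, Sequence

# Hypothetical separator token; the special token a real model uses may differ.
BLOCK_SEP = "[BLK]"


def insert_layout_indicators(tokens: Sequence[str], group_ids: Sequence[int],
                             sep: str = BLOCK_SEP) -> List[str]:
    """Insert `sep` wherever the visual group (e.g. text line or block) changes.

    `tokens` and `group_ids` are parallel: group_ids[i] is the visual group
    that tokens[i] belongs to (an assumed input produced by layout parsing).
    """
    if len(tokens) != len(group_ids):
        raise ValueError("tokens and group_ids must have the same length")
    out: List[str] = []
    for i, (tok, gid) in enumerate(zip(tokens, group_ids)):
        # A new visual group starts at this token, so mark the boundary first.
        if i > 0 and gid != group_ids[i - 1]:
            out.append(sep)
        out.append(tok)
    return out
```

For example, `insert_layout_indicators(["Abstract", "We", "propose"], [0, 1, 1])` yields `["Abstract", "[BLK]", "We", "propose"]`, so the classifier sees that the heading and the body text sit in different visual groups.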

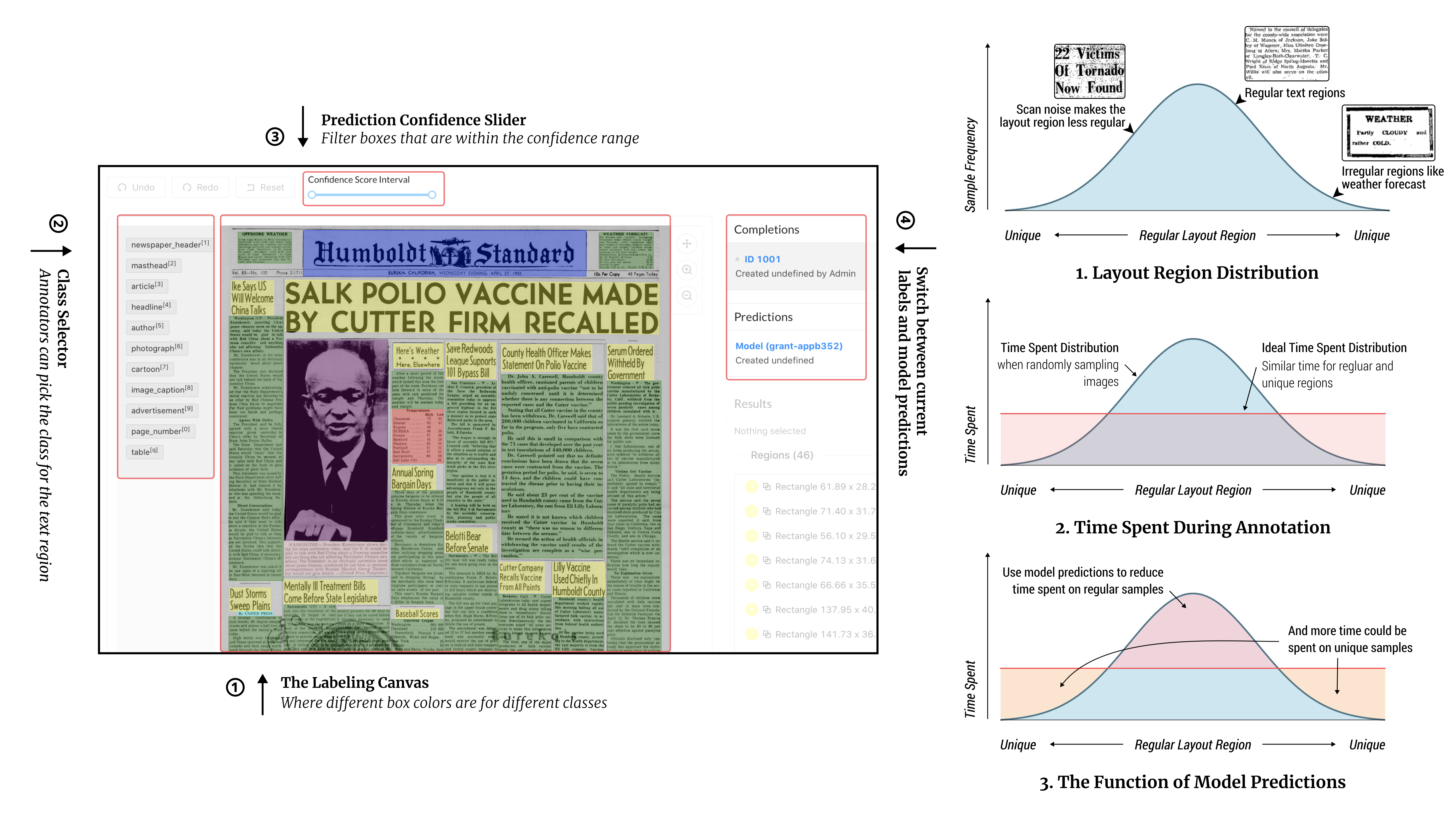

Object-Level Active Learning Based Layout Annotation

Object-Level Active Learning Based Layout Annotation, or OLALA, is a method that aims to simplify the annotation of new layout detection datasets. It builds upon existing active learning methods but selects only the most important object regions, rather than whole images, for labeling. In simulation experiments, OLALA improves labeling efficiency by up to 50% with less than a 2% reduction in model accuracy.
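The core idea of object-level selection can be sketched as ranking individual predicted regions, instead of whole pages, and sending only the top-ranked ones to an annotator. In this minimal sketch a plain confidence-based uncertainty score stands in for whatever scoring OLALA actually uses; `Prediction`, `uncertainty`, and `select_objects_for_labeling` are hypothetical names.

```python
import heapq
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Prediction:
    image_id: str
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2)
    label: str
    confidence: float  # detector score in [0, 1]


def uncertainty(conf: float) -> float:
    """Assumed score: 1.0 at confidence 0.5, falling to 0.0 at 0 or 1."""
    return 1.0 - abs(2.0 * conf - 1.0)


def select_objects_for_labeling(preds: List[Prediction], budget: int) -> List[Prediction]:
    """Return the `budget` most uncertain predicted objects across all images.

    Only these regions would be shown to an annotator; the remaining,
    confident predictions could be accepted without manual labeling.
    """
    return heapq.nlargest(budget, preds, key=lambda p: uncertainty(p.confidence))
```

Because the budget is spent per object, a page with one ambiguous region costs one annotation rather than a full-page relabel, which is where the efficiency gain of object-level selection comes from.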

Tools

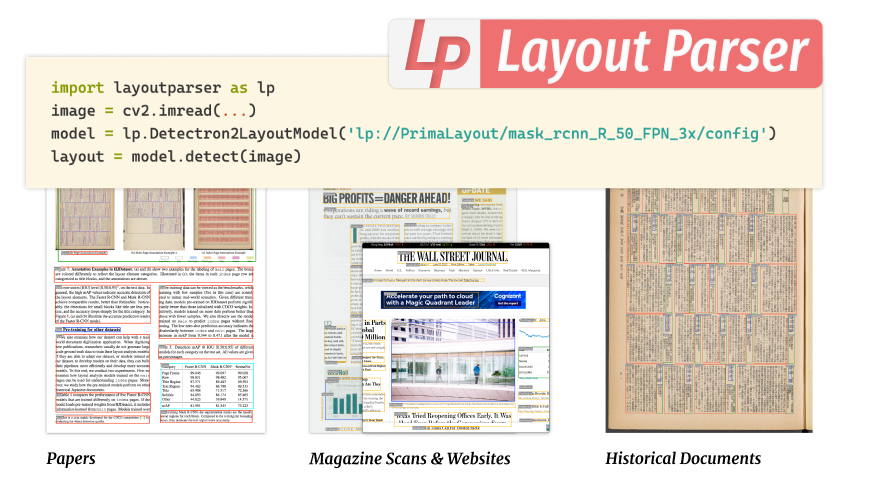

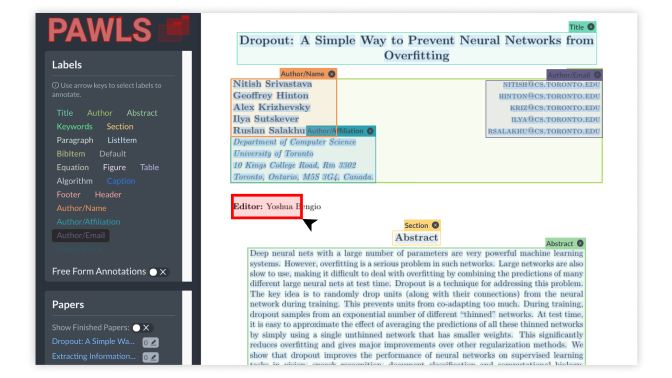

Multi-Modal Document Analysis

Multi-Modal Document Analysis (MMDA) is our latest project; it supports the joint analysis of document images, layouts, and texts. It aims to combine recent advances in NLP research with existing document layout analysis technology to produce models and pipelines with better accuracy and efficiency.
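A basic building block of joint layout and text analysis is linking text tokens to the layout regions that contain them. The sketch below assigns each token to the first region containing the token's center point; it is a generic illustration under assumed data types (`Token`, `Region`, `group_tokens_by_region` are hypothetical) and does not reflect MMDA's actual API.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in page coordinates


@dataclass
class Token:
    text: str
    box: Box


@dataclass
class Region:
    label: str  # layout category, e.g. from a layout detector (placeholder values)
    box: Box


def center_in(box: Box, region: Box) -> bool:
    """True if the center of `box` lies inside `region` (edges inclusive)."""
    cx = (box[0] + box[2]) / 2
    cy = (box[1] + box[3]) / 2
    return region[0] <= cx <= region[2] and region[1] <= cy <= region[3]


def group_tokens_by_region(tokens: List[Token], regions: List[Region]) -> Dict[int, List[str]]:
    """Map each region index to the texts of tokens whose centers fall inside it.

    Tokens matching no region go under key -1; a token matching several
    regions is assigned to the first matching one.
    """
    groups: Dict[int, List[str]] = {}
    for tok in tokens:
        idx = next((i for i, r in enumerate(regions) if center_in(tok.box, r.box)), -1)
        groups.setdefault(idx, []).append(tok.text)
    return groups
```

Once tokens are grouped this way, each region's text can be passed to a language model while the region label and box remain available as layout features.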

PACER Docket Parser

This tool parses PDF-based docket files and generates a structured representation of the case information that can easily be used for downstream analysis.

Datasets

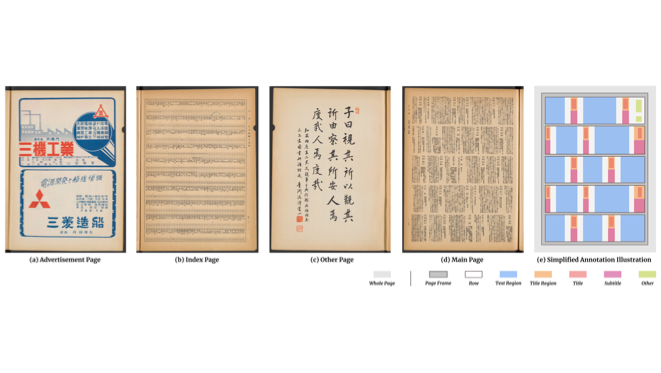

HJDataset

HJDataset is a large dataset of Historical Japanese Documents with Complex Layouts. The inputs are document image scans and the outputs are a collection of document region bounding boxes denoting the visual layout structure and reading order of the regions. It contains over 250,000 layout element annotations of seven types.
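The output described above (typed region bounding boxes plus a reading order) can be modeled with a small record type. This is a sketch under assumptions: the field names, the `(x, y, width, height)` box convention, and the category strings are placeholders, not the dataset's actual schema or its seven type names.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class LayoutRegion:
    category: str                            # element type (placeholder names, not the real labels)
    bbox: Tuple[float, float, float, float]  # (x, y, width, height) in image pixels, assumed convention
    reading_order: int                       # position of this region in the reading sequence


def in_reading_order(regions: List[LayoutRegion]) -> List[LayoutRegion]:
    """Return the regions sorted by their annotated reading order."""
    return sorted(regions, key=lambda r: r.reading_order)


def count_by_category(regions: List[LayoutRegion]) -> Dict[str, int]:
    """Count how many regions of each element type a page contains."""
    return dict(Counter(r.category for r in regions))
```

Keeping the reading order as an explicit integer, rather than relying on box positions, matters for historical Japanese documents, whose reading direction does not follow a simple top-to-bottom, left-to-right scan.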